News:

📅 2026

- 21, Feb. One paper is accepted to CVPR 2026 (Findings).

- 7, Feb. Serving as Associate Editor (AE) for IROS 2026.

- 31, Jan. One paper on Alias-free Gaussian Splatting SLAM is accepted to ICRA 2026.

- 23, Jan. Serving as Session Co-Chair for 3D Computer Vision 1 & 2 at AAAI-26.

📅 2025

- 8, Nov. Two Oral papers are accepted to AAAI 2026: RiemanLine Parametrization and Active3D. [Source Code | Paper] for RiemanLine, [Project Page] for Active3D.

- 26, Oct. Chaired the Online Session 3 – Digital Image Processing and Applications at IEEE ICRCV.

- 11, Oct. Appointed as an Associate Editor of IEEE Robotics and Automation Letters (RA-L). Looking forward to working with the great editorial board and contributing to the robotics community!

- 19, Sep. One paper is accepted to NeurIPS 2025: 4D3R: Motion-Aware Neural Reconstruction and Rendering of Dynamic Scenes from Monocular Videos.

- 18, Aug. The review process for the IROS 2025 Workshop AIR4D is complete. Looking forward to seeing you in Hangzhou!

- 6, Aug. Our new arXiv paper: Riemannian Manifold Representation of 3D Lines for Factor Graph Optimization. [Paper | Code] are available.

- 27, June. Three papers are accepted to ICCV 2025, on 4D SLAM, Scene Graph Prediction, and Autonomous Driving Gaussian Splatting.

- 25, June. One paper is accepted to Computers & Graphics.

- 3, June. Invited to give a talk at Tianjin University: Accurate Localization and Scene Reconstruction in 4D Space.

- 9, May. We're excited to announce that the 1st Workshop on Advancements for Intelligent Robotics in 4D Scenes: Localization, Reconstruction, Rendering, and Generation will be held at IROS 2025 (Hangzhou, China): Website

- 15, April. Our paper: SE(3)-Equivariance Learning for Category-level Object Pose Estimation (Paper) is accepted to IEEE TIM 2025.

- 20, March. Our new arXiv paper: 4D Gaussian Splatting SLAM (Paper) is available.

- 11, March. Our new arXiv paper: Robust Surface Reconstruction (Paper) is available.

- 26, Feb. Our paper: Learnable Infinite Taylor Gaussian for Dynamic View Rendering (Paper) is accepted to CVPR 2025.

- 28, Jan. Our paper: Lifelong Localization using Adaptive Submap Joining and Egocentric Factor Graph (Paper & Code) is accepted to ICRA 2025.

- 13, Jan. Our new paper is accepted to IEEE Sensors Journal.

📅 2024

- Our new paper, ShapeMaker, is accepted to CVPR 2024.

- Our new arXiv paper (28.Nov.2024): SmileSplat: Generalizable Gaussian Splats for Unconstrained Sparse Images (Project) is available.

- Our paper (27.Nov.2024), Accurate Visual Odometry by Leveraging 2DoF Bearing-Only Measurements, is accepted for publication in IEEE TIM.

- Our new arXiv paper (01.Oct.2024): Robust Gaussian Splatting SLAM by Leveraging Loop Closure (Paper) is available.

- Our new paper, Open-Structure (Paper | Code), is accepted to RA-L 2024.

- Our new paper, GeoGaussian (Project Website), is accepted to ECCV 2024.

- In June 2024, I joined the CVRP group at the National University of Singapore (NUS) as a Postdoctoral Research Fellow, advised by Prof. Gim Hee Lee.

Selected Publications (Google Scholar, GitHub):

*Equal contribution †Corresponding author

Name Update: I previously published under the name Yanyan Li.

All works under that name refer to the same author.

- Active3D: Active High-Fidelity 3D Reconstruction via Hierarchical Uncertainty Quantification
  - Yan Li, Yingzhao Li, Gim Hee Lee. @ AAAI 2026 (Oral)
  - [Project Page]

- RiemanLine: Riemannian Manifold Representation of 3D Lines for Factor Graph Optimization
  - Yan Li, Ze Yang, Keisuke Tateno, Federico Tombari, Liang Zhao, Gim Hee Lee. @ AAAI 2026 (Oral)
  - [Paper] [Code]

- 4D Gaussian Splatting SLAM
  - Yanyan Li, Youxu Fang, Zunjie Zhu, Kunyi Li, Yong Ding, Federico Tombari. @ ICCV 2025
  - [Paper] [Project Page] [Code]

- Learnable Infinite Taylor Gaussian for Dynamic View Rendering
  - Bingbing Hu*, Yanyan Li*, Rui Xie, Bo Xu, Haoye Dong, Junfeng Yao, Gim Hee Lee. @ CVPR 2025
  - [Paper] [Project Page] [Code]

- Robust Gaussian Splatting SLAM by Leveraging Loop Closure
  - Zunjie Zhu, Youxu Fang, Xin Li, Chenggang Yan, Feng Xu, Chau Yuen, Yanyan Li†. @ arXiv 2024
  - [Paper] [Project Page] [Code Coming Soon]

-

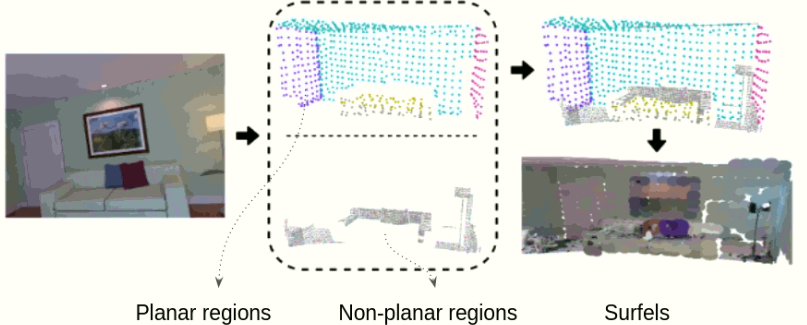

GeoGaussian: Geometry-aware Gaussian Splatting for Scene Rendering

- Yanyan Li, Chenyu Lyu, Yan Di, Guangyao Zhai, Gim Hee Lee, Federico Tombari. @ ECCV 2024

- [Paper] [Project Page] [Code]

- Open-Structure: a Structural Benchmark Dataset for SLAM Algorithms
  - Yanyan Li, Zhao Guo, Ze Yang, Yanbiao Sun, Liang Zhao, Federico Tombari. @ IEEE RA-L 2024
  - [Paper] [Project Page] [Code]

- E-Graph: Minimal Solution for Rigid Rotation with Extensibility Graphs
  - Yanyan Li, Federico Tombari. @ ECCV 2022
  - [Paper] [Video]

- ManhattanSLAM: Robust Planar Tracking and Mapping Leveraging Mixture of Manhattan Frames
  - Raza Yunus, Yanyan Li†, Federico Tombari. @ ICRA 2021
  - [Paper] [Code]

- RGB-D SLAM with Structural Regularities
  - Yanyan Li, Raza Yunus, Nikolas Brasch, Nassir Navab, Federico Tombari. @ ICRA 2021
  - [Paper] [Code]

- Structure-SLAM: Low-drift Monocular SLAM in Indoor Environments
  - Yanyan Li, Nikolas Brasch, Yida Wang, Nassir Navab, Federico Tombari. @ IEEE RA-L/IROS 2020
  - [Paper] [Code]